Decoupling decoupling from rationality

Within the nebulous online space connected to the rationalist community, a concept for understanding disagreement seems to have emerged: the distinction between high-decouplers and low-decouplers (also called decouplers and contextualizers).

The story goes something like this: Some people are more rational than others. These more rational people are better at thinking because they're able to isolate claims and focus on details. Other, less rational people bring up a bunch of unnecessary disconnected "context" and vibes, flailing about, making everything emotionally charged, political, and irrational.

A post that was shared on the slatestarcodex subreddit summarized it as this:

High-decouplers isolate ideas from each other and the surrounding context. This is a necessary practice in science which works by isolating variables, teasing out causality and formalizing and operationalizing claims into carefully delineated hypotheses. Cognitive decoupling is what scientists do.

Being aware of how some people work like this while others don't could supposedly help you understand why people disagree. Or even better, why people disagree with you! Or even best: Why people who disagree with you are wrong!

To lay my cards on the table: I think this is a perversion of what decoupling originally meant. In this post I aim to first summarize the model of rationality that this concept came from, then track its migration through the rationalist blogosphere as best I can, and along the way critique how it has mutated and how this new version of it can easily be misused. I’ll try to end on a positive note.

Thinking fast and slow, and slower

Keith Stanovich is an eminent researcher who has been at the forefront of research on reasoning within what is called the heuristics and biases research programme in psychology. He and his colleague Richard West introduced the terms System 1 and System 2 that Daniel Kahneman used in his very popular book Thinking, Fast and Slow,1 though Stanovich has long preferred the terms Type-1 and Type-2 processing instead. These terms sit under the broader umbrella of "dual process theories" in psychology, which differ on details but share the essential idea that some types of thought are faster and more automatic while other types are slower and more deliberate.2

(I'll talk about Stanovich as one person in this post since I'm basing most of this on the book Rationality and the Reflective Mind, where he is the sole author, but a lot of the time you can think of "Stanovich" as a stand-in for Stanovich et al.)

Within the “heuristics and biases” research tradition the boundaries between types of cognitive information processing are drawn in order to make sense of mistakes in thinking. Type-1 processing/thinking is characterized by being faster, more associative, more holistic, more contextualized and, crucially, less demanding of cognitive/attentional resources. Type-2 thinking is instead slower, rule-based, decontextualized and more demanding. Type-1 thoughts emerge in your consciousness because your unconscious is running a bunch of competing parallel processes regardless of whether you want it to or not. They can be automatically triggered by stimuli in the environment. Type-2 thinking instead is virtually synonymous with conscious thinking, and happens step by step, serially.

Mistakes in reasoning often happen because our mind has a preference for fast, easy Type-1 thinking, even when it shouldn't. This happens because the mind is basically "miserly" with its cognitive or attentional resources - or in simpler terms: We're kinda lazy and impatient. We avoid thinking harder or longer about problems than we have to, and if a solution seems good enough we'll take it and move on.

There are a myriad of examples of these errors, both in everyday life and in the literature. A classic example from Kahneman and Tversky is called “Linda the bank teller”. Participants in the experiment are told the following:

Linda is 31 years old, single, outspoken, and very bright. She majored in philosophy. As a student, she was deeply concerned with issues of discrimination and social justice, and also participated in anti-nuclear demonstrations.

They're then asked which is more probable:

1. Linda is a bank teller.

2. Linda is a bank teller and is active in the feminist movement.

People frequently choose option 2 even though it cannot be more probable than option 1 (everyone who fits 2 also fits 1, so 2 describes a subset of 1). Kahneman attributes this to the representativeness heuristic. In other words, Type-1 thinking is built around which exemplars feel representative and doesn't care about stuff like probability or logic. Linda feels more like an activist-feminist type than a bank teller. It's easy to not even register the impossibility of the conjunction being more likely than the set it is a subset of, because you already feel like you have an answer. This type of heuristic thinking is what comes naturally to us; it is the type of thinking we as humans are evolutionarily built for or have a lot of practice at (Stanovich calls it "overlearned"). To think correctly about this problem we need to take a step back and think slower.
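If it helps to see the arithmetic spelled out, here is a minimal sketch in Python. The probabilities are numbers I made up purely for illustration (they are not from Kahneman and Tversky), and I've deliberately made "feminist, given bank teller" very likely to show that the conjunction still can't win.

```python
def conjunction(p_a, p_b_given_a):
    """P(A and B) = P(A) * P(B | A), the basic product rule."""
    return p_a * p_b_given_a

# Made-up numbers, purely illustrative (not taken from any study).
p_teller = 0.05                 # P(Linda is a bank teller)
p_feminist_given_teller = 0.95  # P(active feminist, given she is a teller)

p_both = conjunction(p_teller, p_feminist_given_teller)

# P(B | A) is at most 1, so the conjunction can never exceed P(A).
print(p_both <= p_teller)  # True
```

However representative the feminist description feels, multiplying by a number that is at most 1 can only keep the probability the same or shrink it.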

Even if you got the Linda problem right immediately I think you can sort of "feel" how the second option could pull you in. Stanovich dubs this overly associative, impressionistic tendency one of The Fundamental Computational Biases of Human Cognition. Our fast Type-1 thinking contextualizes too much: it's leaky, and things that should remain separate get mixed up.3 To ensure that doesn't happen you need to rely on Type-2 thinking.

Another example.

Consider the following syllogism:

Premise 1: All living things need water.

Premise 2: Roses need water.

Conclusion: Therefore, roses are living things.

So, is the syllogism valid? A lot of people get this wrong because their thinking about whether the syllogism holds is corrupted by their knowledge about roses. Roses are living things! That conclusion is good enough, so your thinking stops there. The name for this one is belief bias.

To show that this isn't just a case of syllogisms being sort of abstract and hard to think about, Stanovich (2004) also shares this one, identical in form:

Premise 1: All insects need oxygen.

Premise 2: Mice need oxygen.

Conclusion: Mice are insects.

Here it's instead extra easy to detect that the syllogism is invalid since your knowledge that mice aren't insects tips you off that something is wrong here.
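One way to check the form without leaning on what you know about roses or mice is to brute-force it. The sketch below is my own illustration, not something from Stanovich: it enumerates tiny hypothetical "worlds" of two individuals, each of which either has or lacks three properties, and looks for a world where the premises hold but the conclusion fails. The helper names (`all_are`, `is_valid`) are made up for this example.

```python
from itertools import product

LIVING, WATER, ROSE = 0, 1, 2  # index of each property in an individual

def all_are(i, j):
    """Build the statement 'everything with property i also has property j'."""
    return lambda world: all(ind[j] for ind in world if ind[i])

def is_valid(premises, conclusion, universe_size=2):
    """Return False if some small world makes the premises true and the conclusion false."""
    kinds = list(product([False, True], repeat=3))
    for world in product(kinds, repeat=universe_size):
        if all(p(world) for p in premises) and not conclusion(world):
            return False  # found a counterexample
    return True  # no counterexample among the worlds we checked

# The rose syllogism: all living things need water, roses need water,
# therefore roses are living things.
rose_form = is_valid([all_are(LIVING, WATER), all_are(ROSE, WATER)],
                     all_are(ROSE, LIVING))

# A properly valid form for comparison: all living things need water,
# roses are living things, therefore roses need water.
valid_form = is_valid([all_are(LIVING, WATER), all_are(ROSE, LIVING)],
                      all_are(ROSE, WATER))

print(rose_form)   # False
print(valid_form)  # True
```

The counterexample the search finds is simply a "rose" that needs water but isn't alive, which is exactly the possibility our knowledge about real roses stops us from considering. Note that finding a counterexample proves invalidity, while finding none only covers the small worlds that were checked.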

Kahneman's book was a best-seller and I imagine that this description of the biases and errors of System 1 or Type-1 thinking is somewhat familiar to many readers. Some may also be familiar with the idea that these cognitive biases are actually less irrational than they seem, and are often more of a trick based on how the questions are framed.4 I don't intend to get into The Great Rationality Debate about whether humans are inherently irrational or rational here, but it's useful to know that Stanovich is very clearly on the side that says that these experiments, and the biases they've revealed, mean that humans are irrational. His vision of rationality sees it as related to using careful step-by-step Type-2 thinking to overcome potentially biased Type-1 thinking. That doesn't mean responding with fast Type-1 thinking is in and of itself irrational or anything - our automatic thoughts are often well calibrated - just that it is generally the lack of slow thinking that leaves us vulnerable to irrationality.5

An important aspect of this vision of rationality is that it sees it as distinct from "intelligence", or at least "IQ". Another way to put it is that Stanovich's research project is interested in distinguishing between these things, which means taking a narrower view of IQ as "cognitive capacity" (as opposed to some more folk-psychological ideas about intelligence). Rationality then is not simply "thinking good" and is not primarily about one's cognitive capacity; it is instead about not being clouded by biases. Cognitive biases are sometimes surprisingly weakly related to IQ, but this also differs based on which test you use and which bias or heuristic you're talking about. Making sense of this variability in how different biases relate to IQ is one of the main reasons Stanovich has developed what was previously System 2 into a more granular model.

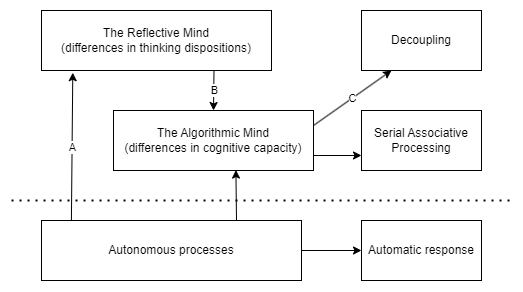

The updated model divides Type-2 thinking/System 2 into two parts and differentiates between different types of serial Type-2 processing. One part he calls "the algorithmic mind", which corresponds to fluid intelligence/cognitive capacity. For most purposes, though, it's both sufficient and clearer to think of it in terms of working memory capacity. Just as it's hard to keep a long phone number in your head without slipping up, "the algorithmic mind" is limited in how much it can process and for how long.6

The other part he calls the reflective mind. This basically represents a metacognitive or executive level that regulates thinking. It has access to goals, opinions, and general knowledge. It can be said to be related both to a form of "crystallized intelligence"7 and to components that are outside the scope of intelligence tests, like "thinking dispositions". People differ in how much they like thinking and how open they are to new perspectives etc. Some people love puzzle games and some people hate them. That sort of thing will affect how likely you are to engage in slow effortful thinking as opposed to going with your first hunch.

He also distinguishes between two kinds of response we can have even when Type-2 thinking happens: serial associative cognition and, finally, the cognitive operation of decoupling.

"The cognitive operation of decoupling, or what Nichols and Stich (2003) term cognitive quarantine, prevents our representations of the real world from becoming confused with representations of imaginary situations." - Stanovich, 2011, p. 49.

This cognitive operation enables intentional hypothetical thought. The mind creates "second order representations" that do not directly interfere with actions. He borrows the term from a model of what children are doing when they pretend, but Stanovich expands the idea. Decoupling is not just actively pretending something is different from what you previously know, but also isolating aspects of some task or mental object in order for them not to be influenced by Type-1 biases. Much like we can focus our vision in a way that intentionally separates foreground and background, decoupling can separate some abstract feature from its surrounding context, and from prior associations. In the rose task above decoupling can be framed as separating the abstract form of the syllogism from the knowledge we possess about roses (or alternatively as "pretending we don't know about roses"). This cognitive operation of decoupling is demanding of our working memory, especially if it's going to be sustained over time.

Decoupling then is necessary for hypothetical thought, which is a big deal:

“Hypothetical thinking is the foundation of rationality because it is tightly connected to the notion of autonomous system override (...) Type 2 processing must be able to take early response tendencies triggered by TASS [the autonomous set of systems] offline and be able to substitute better responses.” - Stanovich, 2011, p.47

Stanovich suggests better responses often come from a sustained process of cognitive simulation. The reflective mind is what detects the need to start this override in the first place, but just detecting it isn't always enough. Let's look at another classic task within the bias literature. The Wason Selection task:

“The participant is shown four cards lying on a table showing two letters and two numbers (A, D, 3, 7). They are told that each card has a number on one side and a letter on the other and that the experimenter has the following rule (of the “if P, then Q” type) in mind with respect to the four cards: “If there is an A on one side then there is a 3 on the other”. The participant is then told that he/she must turn over whichever cards are necessary to determine whether the experimenter’s rule is true or false.” (From Stanovich & West, 1998)

Think about it for a second. Most people get this task wrong.

The automatic Type-1 response here is to try to confirm the rule by turning over the A-card (correct) and the 3-card (incorrect, since the rule says nothing about what has to be behind a 3), and to neglect the 7-card (an A on its other side would disconfirm the rule). This abstract task too would require decoupling according to Stanovich. "If the rule is this and I were to turn over that card, would that disconfirm the rule?" Maintaining the hypothetical "let's pretend this rule applies" is not something our Type-1 thinking naturally does for us; it requires conscious thought and engaging our working memory.
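The "would turning this card possibly disconfirm the rule?" simulation can be written out mechanically. This is only a sketch of the logic of the task, not anything from Stanovich & West, and it assumes (my assumption) that the hidden side of each card can only show one of the letters or numbers already in play.

```python
LETTERS = ["A", "D"]
NUMBERS = ["3", "7"]

def violates_rule(letter, number):
    """The rule 'if A on one side then 3 on the other' is broken only by an A paired with a non-3."""
    return letter == "A" and number != "3"

def must_turn(visible):
    """A card is worth turning only if some possible hidden side would break the rule."""
    if visible in LETTERS:
        return any(violates_rule(visible, n) for n in NUMBERS)
    return any(violates_rule(l, visible) for l in LETTERS)

cards = ["A", "D", "3", "7"]
print([c for c in cards if must_turn(c)])  # ['A', '7']
```

Nothing that could be behind the 3 can break the rule, which is why turning it over tells you nothing, even though it is the card that feels like it matches.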

Serial associative cognition is less important for our discussion here and is most easily understood in contrast to decoupling. Basically, not all conscious step-by-step Type-2 thinking is as demanding of working memory as decoupling, and some thinking mistakes happen while we are Type-2 thinking about problems in a not-sufficiently-decoupled way. Finally, it's important to remember that these reasoning tasks are set up to trip people up and that slow effortful thinking with mental simulation is not automatically the best way to go.

With this “tri-partite model” of the mind Stanovich develops a "taxonomy of thinking errors". For example, some mistakes simply stem from a lack of accessible knowledge about probability, others come from not detecting the need for Type-2 thinking/decoupling, others from an inability to sustain a decoupled representation in working memory for a long time, and other times we're motivated not to think objectively about a problem. There are many ways to fail at thinking; some will depend on cognitive capacity and others won't. Performance on the Wason card selection task above, for example, is correlated with cognitive capacity while "myside bias" isn't. Sometimes the fact that a composite of these "cognitive reflection tests" relates to IQ is thrown at Stanovich et al. as a gotcha (Hah! You have just made an imperfect intelligence test!) - in my opinion this is not the takedown people assume, since the point of the model is to make sense of the details and the variability. A central question in this research has always been why people with similar cognitive ability often differ so much when it comes to biases and thinking. Cognitive capacity in other words can often be necessary but not sufficient for rationality.

To summarize: Our fast autonomous mind comes with a certain set of cognitive biases that can be overridden when we think about things more carefully or step-by-step. Decoupling is a specific type of working memory dependent mental operation where we override our automatic response by creating second order representations to mentally manipulate. This enables intentional hypothetical thought about isolated features of a problem, and is crucial for rationality.

A stroll through the rationalist blogosphere's use of decoupling

I tried to google and backtrack links to understand how this term moved from academic psychology to the rationalist blogosphere. From what I've seen the online use of the term started with a blog post from Sarah Constantin called "Do Rational People Exist" where she, citing Stanovich, concludes: Yes. Some people are more rational than others.

The cognitive reflection test is correlated with less cognitive bias and with IQ, as well as with forecasting ability. There’s a compelling case that “rationality” is a distinct skill, related to intelligence and math or statistics ability.

Further she cites evidence from Stanovich et al that:

Basically, it appears that lower rates of cognitive bias correlate with certain behavioral traits one could intuitively characterize as “reasonable.” They’re less dogmatic, and more open-minded. They’re less likely to believe in the supernatural. They behave more like ideal economic actors.

In this post she also describes decoupling:

Cognitive decoupling is the opposite of holistic thinking. It’s the ability to separate, to view things in the abstract, to play devil’s advocate.

And brings up the enticing idea of a "hyper-rational elite":

Speculatively, we might imagine that there is a “cognitive decoupling elite” of smart people who are good at probabilistic reasoning and score high on the cognitive reflection test and the IQ-correlated cognitive bias tests. These people would be more likely to be male, more likely to have at least undergrad-level math education, and more likely to have utilitarian views. Speculating a bit more, I’d expect this group to be likelier to think in rule-based, devil’s-advocate ways, influenced by economics and analytic philosophy. I’d expect them to be more likely to identify as rational.

The post can be summarized as a research-dump relating to rationality. She doesn't really get anything wrong, as far as I know, but I think there are seeds here of something we will return to later: self-identification with rationality as evidence for one's rationality.

Lucy Keer/drossbucket then reacted to Sarah's post some years later. First in a post called "The Cognitive Decoupling Elite", which is a personal musing about what it's like to not be part of that elite when a lot of your friends are. She also reflects on the other side of the coin, contextualizing. She writes about the unpleasantness of cognitive decoupling in a way I sympathise with and that seems true to its origin as a working memory dependent operation. "It’s certainly very plausible to me that something like this exists as a distinct personality cluster," she writes.

This post seems to have gotten more engagement than she expected, according to her follow-up post. When I look around it seems people more commonly link to her first post, which is a shame since she later describes it as a bit "confused" and the follow-up post dives into what decoupling is in a more detailed way.

When I picked this up, I was mostly excited by the one bit of speculation at the end, and the striking ‘cognitive decoupling elite’ phrase, and didn’t make any effort to stay close to Stanovich’s meaning. Now I’ve read some more, I think that in the end we didn’t drift too far away.

Between Lucy's first and second posts John Nerst wrote a long and influential post that used the expression. Around this time Ezra Klein was fighting with Sam Harris about the platforming of Charles Murray on Harris's podcast. I really don't want to get into the details of that debate but for John Nerst this was basically Christmas Eve. His blog Everything Studies is largely devoted to what he has dubbed "erisology", the study of disagreement, and he's supposedly writing a book about it (I think that sounds like a cool project and wish him good luck). He sees both Harris and Klein as smart, honest and reasonable actors in the public discourse, which meant this was a goldmine for understanding high-level disagreements!

John uses the distinction between high-decouplers and low-decouplers to make sense of why Klein and Harris disagree. He does not see this as the only reason, but he clearly frames it as central.

To a high-decoupler, all you need to do to isolate an idea from its context or implications is to say so: “by X I don’t mean Y”. When that magical ritual has been performed you have the right to have your claims evaluated in isolation. This is Rational Style debate.

(…)But Klein is a journalist, and as that he belongs with the literary intellectuals. To him, coupled ideas can’t just be discharged by uttering a magic phrase. The notion is ludicrous. Thinking in moral and political terms is not a bias, it’s how his job works and how his thought works. Implications and associations are an integral part of what it means to put forth an idea, and when you do so you automatically take on responsibility for its genealogy, its history and its implications. Ideas come with history, and some of them with debt. The debt has to be addressed and can’t be dismissed as not part of the topic — that’s an illegal move in Klein’s version of the rules.

This post seems to have attracted a lot of attention and I think it's probably the catalyst for the widespread use of the term "high-decoupler". Putanumonit picks it up and connects it to the conflict/mistake theorist distinction. More talk about the concept starts to happen on reddit. Later there’s a Quillette article about it. Decoupler/contextualizer becomes the preferred terminology since it sounds less hierarchical. Nerst writes a follow-up post a month later:

In retrospect, I was somewhat careless to articulate this the way I did. I blame seduction by an elegant narrative and the fact that I had no idea it would attract as much attention as it did. If I’d known I think I would’ve phrased it differently. In reality things are a lot more complicated than just “some people will decouple things and others won’t, and these types tend to self-select into different professions”.

(I will return to the follow-up later)

Seduction by an elegant narrative

In my experience eugenics seems to be a lightning rod that reliably gets someone online to use the term "high-decoupler". In 2020 Richard Dawkins got some heat for tweeting:

"It’s one thing to deplore eugenics on ideological, political, moral grounds. It’s quite another to conclude that it wouldn’t work in practice. Of course it would. It works for cows, horses, pigs, dogs & roses. Why on earth wouldn’t it work for humans? Facts ignore ideology."

By 2020 the term had escaped half-obscure blogs and reddit posts. Tom Chivers writes in UnHerd (citing Nerst) about this controversy:

"I think a lot of arguments in society come down to this high-decoupler/low-decoupler difference. And while I hope I’ve done a good job of putting the case for low-decoupling, I am very obviously a high-decoupler, so often I find myself thinking “but they performed the magic ritual! They said they didn’t mean Y!” and being really confused that everyone is very angry that they believe Y."

In my opinion the use of the word decoupling has gradually shifted. The New Decoupling is a bit of a mixed bag, but it is rarely talked about as an effortful mental operation. Instead it's more of a stable, if culturally influenced, personality trait. My impression is that high-decouplers:

- Accept the magic ritual, like Chivers described above. (Rational Style Debate)

- Focus on the literal, objective meaning of things without bringing in a bunch of additional context. Basically being detail-oriented. Isolating ideas from ideas.

- Are willing to play devil's advocate and think controversial ideas (because they decouple their implications from the ideas themselves).

- Think more abstractly.

- Are more rational and scientific than others.

I think there are two problems with this new version of high-decoupler as a personality trait. First of all, its connection to the mental operation seems kind of weak. This New Decoupling seems less about overriding an automatic Type-1 response and more about isolating things from their context. This distinction may seem subtle - like who cares if the isolating takes effort or not? - but I think it's important. A core reason the mental operation of decoupling is connected to rationality is that it goes against our natural tendency to be fast and lazy cognitive misers. A high-decoupler in the Stanovichian sense is someone who detects when to override his Type-1 response and has the capacity to do so.

The more complex, often politically charged situations where I've seen "high-decoupler" used are usually very different from the deceptive experiments described above. Think for example about what it means for Dawkins, a biologist, to say that human selective breeding would work. I can't read his mind but I imagine this is simply something he believes to be true; it is an opinion that's readily available to him. If I were to ask him whether it'd work, the first thought that hits him is probably "sure, why wouldn't it?" If I caught him in a more disinhibited moment he'd be more likely to simply answer that, without any extra qualifiers. Entertaining that hypothetical doesn't load up his working memory. He wouldn't land in another belief if he was forced to go on autopilot. Just because he's seemingly "playing devil's advocate" doesn't mean he's a high-decoupler. (One could even argue that slowing down and thinking more about how he should phrase his point would be the high-decoupler move here).8

It wasn't Dawkins who was using the word, it was Chivers. Even so I think Chivers's acceptance of the magic ritual "I didn't mean Y" comes effortlessly for him, since he states he's confused about why everyone is angry. In many cases whether entertaining a hypothetical feels reasonable probably comes down to whether you trust the person who's speaking and whether the issue they're talking about is sensitive to you. When people are reluctant to engage with a presented premise it is often incorrect to suggest they're either unable to detect the need for decoupling or unable to sustain a decoupled second-order representation. Instead their unwillingness often has to do with an accurate awareness of someone's rhetorical goal. Where are you going with this?

One recent example of this went viral on TikTok. A right-wing content creator confronts a random passer-by with a hypothetical:

- LGBTQ rights or economic stability?

- Why can't you have both?

- You need to pick one.

- Refuse the question.

- You can't refuse the question.

- I do.

- But you can't.

- But I did.

The conversation goes on like that. The guy briefly became a celebrated meme, the "Master Debater". In a way it would make sense to say he’s a “low-decoupler” since he’s not engaging with the hypothetical. But it’s also obvious that this has nothing to do with either his ability to decouple (in the Stanovich sense) or rationality more broadly. He just knows that the framing is not neutral.

The Master Debater example may be a bit extreme but its absurdity neatly illustrates my point. This new version of "high-decoupler" is not simply a person who adaptively is able to override his Type-1 thinking to solve reasoning problems. Instead it's more like someone who agrees to play ball and accept the premises when it comes to thought experiments. Since it is no longer about overriding bias, the connection with rationality seems weak (to say the least).

I'm not alone in noticing this shift in meaning. When I was 7000 words deep into writing this post I noticed Sam Atis had actually pointed out exactly this difference between the academic use of decoupling and the "discourse version" of it in 2022, and applied it to the Harris-Klein debate as well as Chivers's article! (Ah well...)

Klein is almost certainly capable of accepting the Discourse-Decouple (and probably has a high ability to Academic-Decouple), but he believes there is a moral duty not to let the conversation go that way, in the same way that I believe there is a moral duty not to let a eugenicist Discourse-Decouple the moral argument against eugenics from the question of whether eugenics is possible in a public debate.9

Secondly, even if we loosen the definition of decoupling regarding "overriding automatic responses" and working memory dependent representation, being a high-decoupler doesn't necessarily seem more rational. Although before we do that, let's be clear that working-memory dependence is not some minor detail in Stanovich's conception of Type-2 thinking. He frames it as the central difference between Type-1 and Type-2 thought across all dual-processing theories (Evans & Stanovich, 2013). Even so, it's also easy to imagine how being too decoupled, too focused on isolated details and insensitive to context, is a possible failure mode. Rebecca Brown points this out in a response to Chivers (and Nerst) which I warmly recommend:

In some instances, contextualising (not decoupling) looks like ecological rationality and attention to higher-order evidence in action.

When people communicate, the meaning of what they say can shift depending on contextual factors including the tone they choose, their identity, the audience they are talking to, the things they choose not to say, and so on. Humans are highly sophisticated communicators and can take account of such factors without needing to explicitly recognise that that is what they are doing. If a member of the Taliban says “it is perfectly acceptable for women to remain in domestic positions rather than seek paid employment outside the home” it is likely to be interpreted very differently from the same sentence spoken by someone who has campaigned for women’s rights their entire life. Recognising not just the narrow, decontextualised meaning of a communicative act, but the meaning the speaker intends to convey (albeit indirectly via subtext, dog-whistling, nudges and winks) requires contextualising.

She points out that contextualizing is often necessary to include higher order evidence, evidence about your evidence.10 An argument can be structurally valid but still weak/wrong. And an argument can be valid and based on true premises, but still clearly incomplete. One important aspect to contextualize in a lot of communication is whether a source has a clear motive, plausible bias, or conflict of interest. Even if a biased source states things that are "technically correct" it is likely to give an incomplete picture of an issue. A "high-decoupler" could easily extend undeserved credence in those situations.11

This version of decoupling seems to be not-obviously-rational in the sense that it doesn't acknowledge a lot of the communication that actually happens. As Rebecca Brown says, "subtext, dog-whistling, nudges and winks".

"The BBC and Russia Today are both state-funded media and both are invested in advancing their nations' respective propaganda narratives."

The statement above is in one sense true, right? And it doesn't include anything about them being equally bad. Or even similarly bad. But if I were to come across such a statement online, a decoupled, literal, technical interpretation would be missing something important. The contextualized interpretation that whoever wrote it is probably intending to equivocate is far from irrational. (If you want a deep dive into someone who seems to constantly equivocate like this while also denying that that is what he's doing, you can listen to the Decoding the Gurus episode on Chomsky - especially the part about his Ukraine comments). Now I know that searching for dog-whistles and subtext can easily go too far. It potentially opens up the floodgates for politically partisan bias, turning everything said into projective Rorschach tests where people imagine far-fetched nefarious agendas. But insinuating that processing that subtext is itself a failure of reasoning means kneecapping our interpretive machinery. Surely there's a balance.

Finally, to sort of bring these two points together, sometimes overriding your automatic Type-1-response means intentionally bringing in additional context. Whether an override entails decoupling-in-the-sense-of-isolating or contextualizing depends on what your autonomous response is in a given situation. It is true that a fundamental bias of Type-1 is to be too contextualized, associative, and connected, but this only applies to already learned connections. There are many situations where bringing in new context is the thing you have to make an effort to do in order to think clearly about complex problems.12

I think one example of this could be different variants of egocentric bias. Rationality as an ideal is often framed as something sort of "cold" and unemotional. Of course there's some significant truth to that; you don't get motivated reasoning without emotion. Still I think it's also worth remembering that our Type-1 thinking also can bias us to not empathize and thus to not feel. Empathy, identification, and emotion tend to come easily when dealing with stuff one has personal experience with. People who are unlike us, with life-stories unlike ours, are easily simplified in our mind not out of some hatred but simply out of lack of easily accessible information and our tendency to be cognitive misers. Because of this I think the YA author John Green's wholesome call to "imagine others complexly" can be seen as a rationalist mantra. Take a step back. Think slower. Is there some more context here I could be missing? Hypothetically, how would I feel if that had happened to me?

I bring this up partly because I think the unemotional-equals-rational connection is kind of questionable, but also because I think willingness to engage in different devil's-advocate hypotheticals relates to what an issue means to you. Nerst writes that high-decouplers are mistaken for being unempathetic, but sometimes it actually is the case that you're not making an effort to empathize or understand. Neglecting that does not make you more rational.

To summarize: When bloggers started using the word decoupling, its meaning shifted from being a working-memory-dependent simulation that overrides our Type-1 processing to mainly being about isolating things and engaging in thought experiments (plus some associated stuff). There was also a clear shift in emphasis from decoupling as a cognitive operation to the differences between high- and low-decouplers. This shifted meaning means "decoupling" is no longer clearly connected to epistemic rationality.

What makes the New Decoupling a sticky meme

The use of "high-decoupler" suffers from trying to apply a model built around slip-ups in reasoning that happen in a controlled research context to a much messier world. It is perhaps not surprising that the term mutated. Still, to use the meme-gene metaphor, I don't think it's a coincidence that some mutations survive better than others. Mutations live on if they increase the organism's adaptation to the environment. For a meme that environment is the human mind (in a particular culture). The new version of decoupling/high-decoupler is broader and more easily brought out in more situations, which probably facilitates its spread.

This is all of course highly speculative, but it also seems to me that people were clearly more attracted to the idea of the "high-decoupler trait" and what that trait was related to than to what decoupling does. Indeed, the same thing happens twice, with both Lucy Keer and John Nerst: they first use the term to differentiate between types of people and then write a follow-up post that tries to add some nuance by focusing on decoupling more mechanistically. From what I can tell, their first posts seem to have had a larger impact.

Identity is important to people, so a term people can identify with is interesting, especially if that identification comes with a sense of high status or self-worth. Sarah Constantin's original post focuses a lot on which things being a high-decoupler is, or might be, associated with. There's nothing wrong with that in and of itself, I find that interesting too, but I don't think it's incidental. If the post instead had asked "when are we usually irrational?", or "what happens in the mind when we fail to think rationally?", I bet it would have attracted less traffic. The most interesting question is whether some people are more rational, and I think a lot of that interest comes from the idea that "it could be me!"

I don't want to come off as mean-spirited (this was the magic ritual, you are now not allowed to take offense) but it's easy to see how viewing yourself as a high-decoupler can be a convenient psychological defence if someone calls you insensitive. The guilt you feel can easily be deflected by an assurance that you're merely a more rational person than they are. Their faulty emotional low-decoupler mindset made them tell you some bullshit about "context". Never mind, you're better than that.

Sarah Constantin's post contains an affirmation that viewing yourself as rational means you're more likely to be rational. I think many of the people who now use "high-decoupler" haven't read her, or Lucy Keer, or John Nerst, but the idea that decoupling is connected to rationality seems to come bundled with the term. I think this rationality connection, together with the broadening, is what has made the new version of decoupling a sticky meme.

This Is A Trap! If you are interested in epistemic rationality, in forming unbiased true views and opinions, one of the worst things you can do is give yourself a convenient way to dismiss people who disagree with you! And "I am a more rational high-decoupler" is a flexible tool indeed. Stanovich would call this "corrupted mindware": rules for thinking that actively hinder rationality. I don't expect it to affect performance on something like the experiments above, but it's easy to see how it would be at odds with "actively open-minded thinking". The reflective mind is ultimately concerned with goals, and it’s easy to see how the goal “feel good about myself” can conflict with the goal of rationality.

Maybe you can handle it? Maybe you specifically are able to think of yourself as fundamentally more capable of rational thinking than others without it affecting your judgement? If I were you I wouldn't let that thought in. To me rationality is fundamentally connected to being suspicious of yourself - your initial hunches are often simplistic, your ability to think objectively is often compromised. Learning to think means learning to let in new information, even if it feels unfamiliar and painful.

I get it if this sounds overly cynical. Maybe the distinction between high- and low-decouplers is memetically successful simply because it's a good tool for understanding disagreements? Surely that is part of it. However, I've noticed that the people who use the term mostly tend to call themselves high-decouplers and others low-decouplers. We don't want to imagine ourselves as more irrational than others.

"Cognitive decoupling is what scientists do."

Is this New Decoupling a worthless concept?

My initial idea for this post was titled "that's not decoupling". The angle I was going for was that people are basically using the word wrong. But linguistic prescriptivism is almost always hopeless. Just look at how the online use of "gaslighting" has gone from a specific type of psychological abuse within a close relationship, one that serves to undermine someone's trust in their own sanity and judgement, to basically just being synonymous with "lying" (except like extra toxic). There's something about this that I find distressing. It's as if the collective architecture of online discourse itself replicates the fundamental computational biases of Type-1 processing, dooming us to only ever talk about imprecise vibes.

But the way people now use "decouplers/contextualizers" or "high/low-decoupler" still means something! It means something else, and I hope I've made clear how this shifted meaning has (more or less) severed the connection to rationality that existed in the more specific use of the term within the research. But is this new, more nebulous meaning nonsense? Worthless for understanding disagreements? I don't think so. It's clearly grasping at a real thing. I've sort of skipped over John Nerst's follow-up post since I think it was less influential in spreading and forming the meme of decoupling. In his follow-up he partly codifies the shift in meaning and tries to make the best of the situation:

It’s not quite right to frame decoupling as simply a skill the way Constantin (and Stanovich?) does. At least not the way I think of decoupling — which, admittedly, is probably broader. While there is some asymmetry in that abstract and hypothetical thinking don’t come naturally to humans but have to be learned, the difference between decoupling and non-decoupling isn’t purely or even mainly about ability. In many cases, the explosive ones like Harris-Klein especially, it’s more a matter of opinion which approach should be used.

He introduces a prima facie plausible model for when people decouple, admitting that it's not simply a personality trait. He suggests that decoupling can come down to (1) ability to think abstractly, (2) lack of ability to contextualize, (3) disposition, (4) opportunism, and (5) local cultural norms. He also notes that: "If there’s a plausible threat further down the line, you’re going to contextualize more."13

The follow-up focuses more on decoupling-in-the-sense-of-isolating as a thing that happens in disagreements instead of just a difference between people. That doesn't mean differences between people don't matter at all. Some people really are more "isolating" and less sensitive to context, and some people really are used to different cultural norms around thought experiments. I think being attentive to that could be useful, but not without balancing it with an awareness of how decoupling can be used opportunistically, and how it often comes with an undeserved air of rationality.

And we shouldn’t get ahead of ourselves just because the concept feels useful. There are a lot of unanswered questions regarding Nerst’s new broader meaning of decoupling, and it’s worth remembering that with this shifted meaning you can no longer just look at studies about correlations between “cognitive reflection tests” and other things to learn about it. The most central question to me would be the degree to which this version of decoupler/contextualizer is a stable trait and the degree to which it depends on the particular situation. (If it’s somewhat stable I’m also curious about how it relates to the idea of lumpers and splitters. Or autistic traits? Or being interested in analytical philosophy? Or the way you approach art?)

There's something ironic here I can't help but point out: The version of decoupling that spread within the rationalist blogosphere comes off as somewhat impressionistic, associative, contextualized. It is understood through representative exemplars and then hastily used as a tool for analysis. Bloggers cite bloggers that cite research. Immediately using the concept seems more fun than investigating it. This process contrasts quite a bit with Stanovich's vision of rationality as slow, careful, unbiased information acquisition. Instead I think there's "satisficing" similar to what we see on the classical reasoning tasks. Something close enough gets substituted for the real thing. The impression of the concept feels useful, so we roll with it. It's a low-decoupled version of decoupling.

Perhaps that's too uncharitable (or even smug). I'm basically just some guy who read a couple of books some years before anyone started talking about this expression online, so I shouldn't frame myself as an expert. I also don’t see Keith Stanovich as the ultimate authority on all things rationality, but I think there’s something fundamentally true and useful about the main ideas in this literature, and since it’s his model the term got its connection to rationality from, I thought it made sense to keep his framing. I think my reading of the model is accurate enough, but I'm open to being wrong.

But I think I'm right. If people are going to keep talking about decoupling (which they are), I think spreading awareness of the significant differences between Academic-Decoupling and Discourse-Decoupling could bring a lot of clarity. And I hope I've convinced you that if you're going to keep using "high-decoupler", you shouldn't assume it means rational, objective, reasonable or smarter than others.

References:

Academic:

Evans, J. St. B. T., & Stanovich, K. E. (2013). Dual-Process Theories of Higher Cognition: Advancing the Debate. Perspectives on Psychological Science, 8(3), 223-241.

Kahneman, D. (2011). Thinking, Fast and Slow. Farrar, Straus and Giroux.

Stanovich, K. E. (2004). The Robot's Rebellion: Finding Meaning in the Age of Darwin. University of Chicago Press.

Stanovich, K. E. (2011). Rationality and the Reflective Mind. Oxford University Press.

Stanovich, K. E., & West, R. F. (1998). Individual differences in rational thought. Journal of Experimental Psychology: General, 127(2), 161–188.

Stanovich, K. E., West, R. F., & Toplak, M. E. (2013). Myside Bias, Rational Thinking, and Intelligence. Current Directions in Psychological Science, 22(4), 259-264.

Blogposts/articles:

Do Rational People Exist - Sarah Constantin (2014)

The Cognitive Decoupling Elite - Lucy Keer (2018)

A Deep Dive into the Harris-Klein Controversy - John Nerst (2018)

The Context Is Conflict - Jakob Falkovich (2018)

Decoupling Revisited - John Nerst (2018)

Cognitive Decoupling And Banana Phones - Lucy Keer (2019)

‘Eugenics is possible’ is not the same as ‘eugenics is good’ - Tom Chivers writing for UnHerd (2020)

Decoupling, Contextualizing and Rationality - Rebecca Brown (2020)

Decoupling as a Moral Decision - Sam Atis (2022)

People often attribute these terms to Daniel Kahneman, who no doubt has been even more of a giant in the field, but I always find this misattribution weird since Kahneman clearly attributes the terms to Stanovich and West when introducing them in the first part of the book (see page 49 of Thinking, Fast and Slow). Did people just pretend to read this book?

The Robot's Rebellion from 2004 has a nice overview of different examples (page 35). See also Evans & Stanovich (2013) for a defence of these types of theories generally and a description of their lowest common denominators.

The other fundamental biases he suggests are the tendency to socialize problems even when there are few interpersonal cues; to see deliberate design where there is none; and an excessive tendency towards narrative thinking. For our purposes here contextualizing is most important, but these biases are also suggested to be mutually reinforcing and possibly arising from some more basic mode of processing information (pages 110-113 in The Robot's Rebellion, Stanovich 2004).

For example, I think that the original Linda problem had more options to mislead people and lull them into Type-1 thinking. Gerd Gigerenzer has spent a large part of his career arguing that these biases are actually reasonable, “ecologically rational” given the constraints of time and energy we have.

In the literature there's also a distinction between epistemic and instrumental rationality. Here I'm only concerned with epistemic rationality.

Stanovich uses the term fluid intelligence here, but he also constantly refers to the relevance of working memory, and justifies the focus on fluid intelligence with its correlation with working memory. Honestly, I'm a bit confused about why he doesn't just let the algorithmic mind in his model stand for working memory capacity, but I’m sure there’s a reason I’ve missed. I think “cognitive capacity” works well as a term for the purposes of this post.

Stanovich maintains that it relates to intelligence-as-knowledge but that the knowledge relevant for rationality usually isn't covered on intelligence tests. One of his books is called “What Intelligence Tests Miss”. I think he’s sort of unpopular in the IQ-fandom because of this.

The highest decoupler move is to never tweet ever.

I wrote out the abbreviations to make “A-decouple” and “D-decouple” more readable.

Rebecca Brown connects this to a wider critique of whether System 1/Type-1 thinking is even meaningfully biased, citing Gigerenzer’s argument that heuristics are useful under ecological conditions. I don't think this part of the critique is necessary for my point here since I argue that this new meaning of decoupling (and contextualizing) is different anyway.

I sometimes find myself observing and sometimes (unfortunately) arguing with climate change deniers online, and I find it amazing how utterly uninterested they are in whether their source is funded by the Koch brothers. I don’t think that’s because they’re “low-decouplers”; they’ve just picked a side and are doing motivated reasoning, “decoupling” when it suits them and “contextualizing” when it suits them.

However, I think Stanovich would call this search for additional relevant context decoupling too, since it overrides our Type-1 thinking to engage in intentional simulation. But it's not the “decoupling-in-the-sense-of-isolating” that the looser use of the word implies.

Although I would bet that motivated reasoning actually goes deeper. If decoupling-in-the-sense-of-isolating feels like protection from a plausible threat further down the line, then you'd probably choose that instead of contextualizing. But I agree there's an asymmetry.
