Saying "priors" is fine actually

It's either not that bad or actively good

"I am updating my priors (beep boop)", can seem like a weird thing to say. I've previously been sympathetic to a critique of this expression that goes something like this:

People only say "priors" because it makes them seem more rational. Actually "priors" just means beliefs/assumptions/expectations/schemas, so there's no reason to use the word. It is arrogant and cringe and evil!

I do think this is a potential problem worth taking seriously. If prior just means belief, why are you not saying belief? What are you trying to achieve by this obscurantism, huh?[1]

Use of the expression spread from people like Nate Silver teaching people to think probabilistically and Eliezer Yudkowsky advocating for his ideas about rationality. Basically, an ideal rational agent can be modelled with Bayesian maths, and some people argue that you should try to embody that ideal. I'm very much of the view that people want to see themselves as rational, especially as more rational than others, and that this can be self-sabotaging. When it comes to epistemic rationality and critical thinking, there's always a danger in stuff that inflates your ego. The overall human tendency is to think too much of yourself. Hubris is blinding (and annoying for those around you).

But I am also very much of the view that there is something to learn about how to think better.[2] Knowing some basic probability theory is a very useful tool for thinking clearly. The formalism around Bayes' theorem can be hard to apply with any meaningful precision when it comes to my own fuzzy beliefs, but as an ideal it's fundamentally good. Hopefully saying "prior" instead of "belief" reminds people of some useful ways to think about their beliefs, such as:

1. Belief is not binary.

When you are asked "do you believe X?", the answer you're most expected to give is usually either a yes or a no. But beliefs aren't, and usually shouldn't be, absolute convictions. Some things you believe are more justified, others less. You could express this as degrees of confidence, from 0 to 100. Philosophers had to invent the concept of credence to distinguish between this more fine-grained thing and binary belief/disbelief, so if this feels trivial to you I assure you it's not as trivial as it seems.

Bayesians argue that these beliefs/credences should also follow the laws of probability. This is by no means uncontroversial philosophically (to be fair, few things are), but for practical purposes the framework allows you to talk about things like calibration and internal consistency in a way that is very helpful and illuminating. The word "belief" itself does not automatically carry these undertones.

2. Evidence should always shift your confidence (at least a little bit)

An important implication of letting beliefs/priors follow the laws of probability is that evidence should always affect your posterior probability. Say you are confident there are no ghosts, as in you think the odds of ghosts turning out to be a real thing are 1:100000. Then you hear about a famously haunted mansion that several of your close friends went to, and all of them say they saw a ghost. This should shift your probability by some amount. Maybe not by a lot: the chance that they're just playing a prank on you, or that something else is going on, should probably count for a lot. But it's not enough that another explanation is more likely. Unless you are 100% certain (1:∞) that something else is going on, your prior should shift. You never get to just say "nope" to evidence.
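The ghost example can be sketched with Bayes' rule in odds form. This is only an illustration: the prior odds come from the text, but the likelihood ratio of 1.5 is a made-up assumption standing in for "my friends' report is slightly more expected if ghosts are real than if they aren't".

```python
from fractions import Fraction


def update_odds(prior_odds, likelihood_ratio):
    """Bayes' rule in odds form: posterior odds = prior odds * likelihood ratio."""
    return prior_odds * likelihood_ratio


def odds_to_probability(odds):
    """Convert odds in favor (for:against, as a single ratio) to a probability."""
    return odds / (1 + odds)


# Prior from the text: 1:100000 odds that ghosts are real.
prior_odds = Fraction(1, 100_000)

# ASSUMPTION (not from the post): the report is 1.5 times as likely
# in a world with ghosts as in a world without them.
likelihood_ratio = Fraction(3, 2)

posterior_odds = update_odds(prior_odds, likelihood_ratio)

print("prior probability:    ", float(odds_to_probability(prior_odds)))
print("posterior probability:", float(odds_to_probability(posterior_odds)))
```

The shift is tiny, but it is not zero. Only a likelihood ratio of exactly 1 (evidence equally expected either way) or prior odds of exactly 0 would leave the number where it was.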

I picked the ghost example because I think it triggers some natural resistance, but if you firmly argue supernatural stuff is a special type of impossible, substitute "mushrooms that can heal all illness" or something else bizarre for ghosts. Admittedly, I think Cromwell's rule (never assign a probability of exactly 0 or 1 to anything that isn't settled by logic alone) isn't air-tight for limited sinful flesh-monkeys like us. Rounding to 0% can be OK. Sometimes. Rarely. But again, I think the usual tendency among people definitely errs in the other direction. Unceremoniously rejecting evidence that doesn't fit your worldview is a far more common failure mode. The word "prior" can remind people that's verboten.

Does it matter?

So the question is: which effect is stronger? Does the trend of talking about priors mostly lead people to think they're perfect rational robots, or does it remind them that beliefs are just shifting probabilities?

I now think the second one is a bit more likely. It's hard to describe why I shifted my opinion on this. But my sense is that, unlike e.g. "high-decoupler", "prior" is not a potent concept for sorting people into more or less rational. There's some social signalling around rationality that can maybe be bad, but there's also some real substance there! If someone steeped in online rationalist discourse comes across a person who is clearly thinking granularly about beliefs and probability, they're not going to look down on them or dismiss them for not using the p-word.

The third option is of course that it doesn't matter. Words may come with connotations, but those diminish and shift with repeated use anyway; using a different word for belief doesn't affect your thinking in any fundamental way. I think this is probably the most likely scenario. In that case it's also fine to say priors.

What about the likelihood?

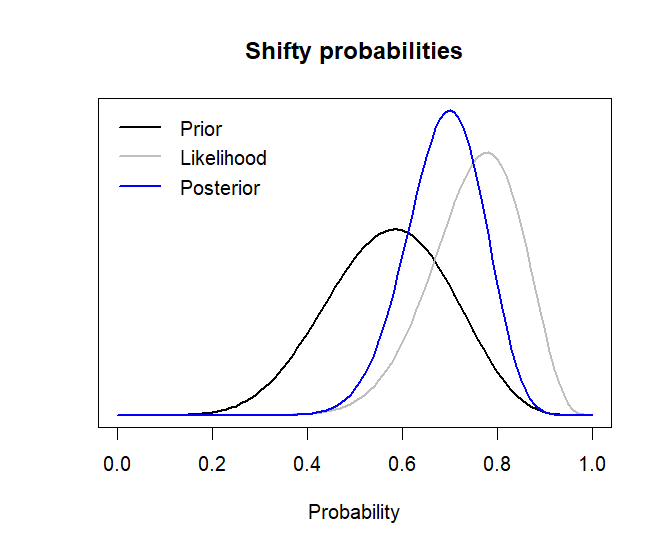

To keep it fair and balanced: There is one other thing with this talk about priors that bother me that I don't see brought up as often. When Bayes is introduced as a model for updating beliefs, one easily gets the impression that the big thing about disagreements are different prior beliefs in a conclusion. Person A has prior for a proposition that's 1:1 (50%), and person B has a prior 1:2 (67%). Both come across some evidence that can be expressed as a likelihood ratio 1:3. Person A should now update his prior to 1:3, and person B should update to 1:6. Often there's a picture of some distribution shifting like this:

But almost always the way disagreements play out is less about the prior plausibility and more about how people judge the strength of the evidence. You can see this pretty clearly in the diverging probabilities in the Rootclaim debate on Covid origins.

Ah, but yes, the strength of the evidence is interpreted based on your priors!

Yes, but those are other, additional priors, not the prior probability of the proposition itself. When Bayesianism is sold as ideal rationality, it's always with these simple toy examples where the likelihood is pretty much "raw data", or at least it's very easy to say beforehand how much you think a specific piece of data should affect your probabilities.
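To make the contrast concrete, here is a small sketch. The first pair reuses the A and B numbers from above, reading the odds as against:for (so 1:2 means two-to-one in favor). The second pair is hypothetical: two people who share a 50% prior but read the same evidence in opposite directions.

```python
def posterior_probability(odds_for, likelihood_ratio):
    """Multiply the odds in favor by the likelihood ratio, then convert to a probability."""
    posterior_odds = odds_for * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


# Same evidence (3x in favor), different priors: the example from the text.
a = posterior_probability(1, 3)  # prior 50%
b = posterior_probability(2, 3)  # prior 67%
print(f"different priors, same likelihood ratio: {a:.3f} vs {b:.3f}")

# HYPOTHETICAL: same 50% prior, but C thinks the evidence is 3x in favor
# while D thinks the very same evidence is 3x against.
c = posterior_probability(1, 3)
d = posterior_probability(1, 1 / 3)
print(f"same prior, different likelihood ratios: {c:.3f} vs {d:.3f}")

print(f"gap from priors: {abs(a - b):.3f}, gap from likelihoods: {abs(c - d):.3f}")
```

With these particular numbers the disagreement about evidence strength opens a much bigger gap than the disagreement about priors. That is an artifact of the numbers chosen, but it matches how the disputes described above tend to look.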

How much something should affect your posterior probability is often much harder to get a good intuition for. Maybe that's just a me problem. Before Trump got into office I was sketching out how to make a series of predictions about his term that would be correlated by a general factor (the General factor of Trump Terribleness, GTT). The predictions themselves would be a product of both this factor and some baseline probability, and if they came true they would update the estimate of the GTT, which would in turn affect the rest of the predictions. This was my way of making conditional predictions beforehand. I figured the common unknown for most predictions I thought of would be what type of guy Trump really is, and how well the rest of the US would restrain his impulses. It wouldn't make sense to treat them as separate predictions for the sake of calibration.
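A toy version of that setup might look like the sketch below. Everything in it is an assumption made for illustration: the three factor levels, the prior over them, the baseline probabilities, and above all the rule that a prediction's probability is simply the baseline times the factor level. That last rule is the hard part, and here it is just assumed.

```python
# HYPOTHETICAL factor levels, used as multipliers on each baseline probability,
# and a hypothetical prior belief over those levels.
LEVELS = [0.5, 1.0, 1.5]
PRIOR = [0.25, 0.5, 0.25]

# HYPOTHETICAL baseline probabilities for three unnamed predictions.
BASELINES = [0.2, 0.4, 0.6]


def event_prob(level, baseline):
    """Assumed likelihood: P(prediction comes true | factor level), capped at 1."""
    return min(1.0, level * baseline)


def update_factor(belief, index, came_true):
    """Bayes update of the belief over factor levels after prediction `index` resolves."""
    weights = []
    for level, weight in zip(LEVELS, belief):
        p = event_prob(level, BASELINES[index])
        weights.append(weight * (p if came_true else 1.0 - p))
    total = sum(weights)
    return [w / total for w in weights]


def marginal_probs(belief):
    """Probability of each prediction, averaged over the current belief about the factor."""
    return [
        sum(w * event_prob(level, baseline) for level, w in zip(LEVELS, belief))
        for baseline in BASELINES
    ]


before = marginal_probs(PRIOR)
belief_after = update_factor(PRIOR, index=0, came_true=True)
after = marginal_probs(belief_after)

print("before:", [round(p, 3) for p in before])
print("belief over factor after prediction 0 came true:", [round(w, 3) for w in belief_after])
print("after: ", [round(p, 3) for p in after])
```

One prediction coming true pushes belief toward the higher factor levels, which raises the probabilities of the remaining predictions. A prediction failing (`came_true=False`) pushes the other way.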

Anyway, other than me obviously having better things to do during the Christmas season, the thing I got stuck on was how to specify the likelihoods that would update this prior.[3] Evaluating how much your priors should update is even harder than just quantifying your beliefs as probabilities.

I think the result, unfortunately, is that people consider what probability they feel comfortable ending up with given the new evidence and work backward from there. That surely undermines most of the point of doing things with exact numbers. Specifying that degree of conviction precisely and then bringing out a bunch of improvised stuff about how you should evaluate the strength of evidence doesn't really get you anywhere. (Although I'm also not sure just how closely you should stick to Bayesian maths as a goal. I wrote some about this in my review of Everything Is Predictable: I don't think you can ever exorcise the informal part of critical thinking and replace it with numbers.)
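One way to catch yourself working backward is to compute the likelihood ratio that your chosen posterior implies and ask whether you would have signed off on it before seeing where it leads. A minimal sketch, with made-up numbers:

```python
def implied_likelihood_ratio(prior_probability, posterior_probability):
    """Likelihood ratio that would carry the prior to the posterior under Bayes' rule."""
    prior_odds = prior_probability / (1 - prior_probability)
    posterior_odds = posterior_probability / (1 - posterior_probability)
    return posterior_odds / prior_odds


# HYPOTHETICAL: you started at 20% and "feel comfortable" landing at 30%.
ratio = implied_likelihood_ratio(0.20, 0.30)
print(f"implied likelihood ratio: {ratio:.2f}")
```

If the implied ratio is not one you could defend on its own terms, the posterior was chosen by feel and the numbers are decoration.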

Conclusion

So I have some caveats about the disproportionate focus on the priors when presenting Bayes as an ideal. More generally, I think there's often a simplification of the challenges of epistemology. But the overarching principles are good, and I think saying "priors" may anchor people to those good principles.

With subcultural stuff like this there's always a risk of confusing effects from self-selection with something causal. In another era of the Internet there was a lot of talk about "logical fallacies", and I remember some voices arguing that learning a list of fallacies actually made people worse at logical thinking. Instead, the fallacies are merely used as a tool to win debates, which then also poisons the mind of the person using them. There is maybe a grain of truth in that, but it could just as well be a selection effect: the type of person emotionally invested in dominating and humiliating their opponents in debate, destroying their ass with facts and logic (as it were), is probably going to be interested in learning about fallacies. More weapons for their arsenal. But did learning about fallacies make this 2008-guy obnoxious, or was he just obnoxious from the start? Add to that the fact that those guys are usually louder and more visible online, and you get a spurious association between being a bad-faith debater and talking about logical fallacies.

I think something similar happens with the people who hate on people who say "priors". They find a bunch of things about rationalists (etc.) cringe and annoying. Causal stories about how the words rationalists use make them bad and cringe naturally emerge later, but I'm confident these people would find rationalists cringe no matter what words they used.

And the thing about cringing is that it's always a you problem. If you feel you need to destroy someone because they make you cringe, I think you should stop feeling that. Resolve whatever hang-ups from high school make you care about whether stuff is cringe, and move on.

As to saying "I'm adjusting my priors", I think it's fine, but "beep boop" is a bit much.

[1] To be fair to the post I'm linking there: it contains a more sophisticated critique than my caricature.

[2] I'm very frustrated by excessive relativism around biases and rationality. "Everyone is biased." Yup. But not everyone is equally biased. You should try to be less biased.

[3] And vice versa; it doesn't have to be symmetrical.